Introduction

We are drowning in planetary data: daily satellite snapshots of the Earth, air and water sensor readings every minute, wildlife camera footage every second, and climate records that stretch back decades. Hidden in that flood are the weak signals that tell us where forests are thinning, where rivers are stressed, where species are slipping, and where emissions are quietly rising.

In this short post I highlight advances in machine learning that improve how we understand environmental change, detect degradation, and guide prevention, mitigation, and restoration.

Deforestation and Land Degradation – Eyes in the Sky

Feed satellite imagery from sources such as Landsat, Sentinel, and PlanetScope into computer vision models to map what is on the ground and how it changes week to week.

Texture and canopy geometry are learnable. Computer vision models such as CNNs, trained on this imagery, can tell natural forest from the regimented rows of plantations, and temporal models can flag new clearings despite intermittent cloud cover.

Core techniques

- U-Nets segment imagery into classes (intact forest, plantation, cropland, bare soil).

- Temporal models (1D CNN/LSTM/Transformers) learn change over time across image stacks.

- Weak supervision for places with few labels and active learning to prioritize expert review.

- Metrics integrated into training and evaluation: IoU/mIoU for segmentation quality, user’s and producer’s accuracy (per-class precision and recall, respectively), and time-to-detection for new clearings.
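The segmentation metrics in the list above can be computed from a single confusion matrix. The following is a minimal sketch, assuming integer class labels per pixel; the function names are illustrative and not taken from any particular library.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Count pixels: rows are reference classes, columns are predicted classes."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    idx = y_true * num_classes + y_pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def segmentation_metrics(cm):
    """Per-class IoU, mean IoU, user's accuracy (precision), producer's accuracy (recall)."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as the class, but reference says otherwise
    fn = cm.sum(axis=1) - tp  # reference is the class, but prediction missed it
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / (tp + fp + fn)
        users = tp / (tp + fp)
        producers = tp / (tp + fn)
    return {
        "iou": iou,
        "miou": float(np.nanmean(iou)),  # classes absent from both maps are skipped
        "users_accuracy": users,
        "producers_accuracy": producers,
    }

# Toy example with 4 classes: 0 intact forest, 1 plantation, 2 cropland, 3 bare soil
reference = [0, 0, 1, 1, 2, 3]
predicted = [0, 1, 1, 1, 2, 3]
metrics = segmentation_metrics(confusion_matrix(reference, predicted, num_classes=4))
```

In this toy case one intact-forest pixel is mislabeled as plantation, which lowers producer's accuracy for forest and user's accuracy for plantation.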

Near-real-time alerts move enforcement from “after the fact” to “on the way”. On the restoration side, models rank sites by likelihood of success (soil, slope, climate, proximity to seed sources), so tree planting budgets go where survival odds are highest.

Air Pollution and Emissions – From Patchy Sensors to Full Maps

Blend ground stations, low-cost sensors, meteorology, and satellites to estimate and forecast pollution at street to city scale. Physics provides direction (wind, boundary layer effects), while ML learns the residuals (local quirks and nonlinearities) so maps fill in the blanks where monitors do not exist. Public resources such as EPA’s Air Quality System and OpenAQ supply valuable ground-truth data that complement remote sensing inputs.
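The "physics first, ML on the residuals" idea can be sketched in a few lines. This is an illustration under loud assumptions: the baseline formula is a toy stand-in for a real dispersion model, the `traffic` covariate and all data are synthetic, and a ridge regression stands in for the gradient boosting or graph models a real system would likely use.

```python
import numpy as np

def physics_baseline(emission_rate, wind_speed):
    """Toy physical prior (an assumption, not a real dispersion model):
    concentration rises with emissions and falls with wind speed."""
    return emission_rate / np.maximum(wind_speed, 0.5)

def design_matrix(features):
    """Prepend an intercept column to the feature array."""
    features = np.asarray(features, dtype=float)
    return np.column_stack([np.ones(len(features)), features])

def fit_residual_model(features, residuals, ridge=1e-3):
    """Ridge regression on (observed - physics); the intercept is not penalized."""
    X = design_matrix(features)
    penalty = ridge * np.eye(X.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + penalty, X.T @ residuals)

def hybrid_predict(emission_rate, wind_speed, features, weights):
    """Physics baseline plus the learned correction."""
    return physics_baseline(emission_rate, wind_speed) + design_matrix(features) @ weights

def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((np.asarray(y_pred) - np.asarray(y_true)) ** 2)))

def demo(seed=0):
    """Synthetic comparison of physics-only versus hybrid predictions."""
    rng = np.random.default_rng(seed)
    n = 300
    emission = rng.uniform(5, 20, n)
    wind = rng.uniform(1, 8, n)
    traffic = rng.uniform(0, 1, n)  # hypothetical local covariate
    observed = (physics_baseline(emission, wind)
                + 2.0 + 3.0 * traffic        # local effect the physics misses
                + rng.normal(0, 0.3, n))     # sensor noise
    train, test = slice(0, 200), slice(200, n)
    residuals = observed[train] - physics_baseline(emission[train], wind[train])
    weights = fit_residual_model(traffic[train], residuals)
    physics_only = rmse(observed[test], physics_baseline(emission[test], wind[test]))
    hybrid = rmse(observed[test],
                  hybrid_predict(emission[test], wind[test], traffic[test], weights))
    return physics_only, hybrid
```

Calling `demo()` returns the held-out RMSE for the physics-only and hybrid predictions. On this synthetic data the hybrid error should be much lower, because the unexplained local effect was deliberately built into the observations.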

Core techniques

- Spatiotemporal fusion (gradient boosting or graph neural nets) merges sparse monitors with satellite NO2 columns and aerosol optical depth (a proxy for PM).

- Sequence models (LSTM/Temporal Fusion Transformer) forecast PM2.5/ozone 6-72 hours out. Metrics: RMSE/MAE, R^2 on held-out time periods.

- Vision models detect plumes and facility activity to infer plant-level emissions.
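Evaluating a forecaster on held-out time periods means splitting chronologically, never shuffling. The sketch below scores a persistence baseline ("the value `horizon` steps from now equals the value now") on the final part of a series. The helper names are illustrative, and the persistence baseline is an assumption added here as the usual reference point that a sequence model should beat.

```python
import numpy as np

def persistence_forecast(series, horizon, start):
    """For each index t >= start, predict the value observed `horizon` steps earlier."""
    series = np.asarray(series, dtype=float)
    if start < horizon:
        raise ValueError("start must be >= horizon")
    return series[start - horizon:len(series) - horizon]

def regression_scores(y_true, y_pred):
    """RMSE, MAE, and R^2 for a set of forecasts."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    return {
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "mae": float(np.mean(np.abs(err))),
        "r2": 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan"),
    }

def evaluate_on_holdout(series, horizon, test_fraction=0.2):
    """Score the persistence baseline on the last `test_fraction` of the series."""
    series = np.asarray(series, dtype=float)
    start = len(series) - int(round(len(series) * test_fraction))
    y_true = series[start:]
    y_pred = persistence_forecast(series, horizon, start)
    return regression_scores(y_true, y_pred)
```

With hourly PM2.5 data, `evaluate_on_holdout(pm25, horizon=24)` would give the 24-hour persistence scores on the most recent 20% of the record. Note that R^2 can be negative when a forecast does worse than predicting the test-period mean.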

Cities get earlier health advisories, regulators see which facilities actually drive spikes, and methane super-emitters are flagged for repair. It is accountability with pixels and timestamps.

Water Quality – Reading Lakes and Rivers

Multispectral satellite imagery from NASA OceanColor and Landsat/Sentinel provides surface reflectance used to estimate chlorophyll-a and turbidity, while in-situ measurements from USGS Water Data track dissolved oxygen, pH, and contaminants in rivers and lakes. Together, these datasets give an informative view of water health across scales. Machine learning links these sources to highlight patterns such as harmful algal blooms, turbidity spikes, or treatment plant anomalies, making early detection possible.

Core techniques

- Multispectral regression with CNNs to map chlorophyll/turbidity from Sentinel-2/Landsat. Metrics: RMSE/MAPE for concentration estimates compared to lab samples.

- Autoencoders and isolation forests on treatment-plant sensors to catch anomalies. Metrics: precision/recall for anomaly alarms, time-to-detection.

- Physics-informed nets to respect mass-balance and known optical properties, improving generalization across basins. Metrics: calibration scores and cross-basin transfer performance.
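To make the anomaly-alarm metrics concrete, here is a small sketch. It uses a trailing-window robust z-score (median and MAD) as a deliberately simple stand-in for the autoencoders and isolation forests named above, then scores the alarms with precision, recall, and time-to-detection. The window size, threshold, and function names are assumptions chosen for illustration.

```python
import numpy as np

def robust_zscore_alarms(signal, window=24, threshold=4.0):
    """Flag points that sit far from the median of the preceding `window` samples."""
    signal = np.asarray(signal, dtype=float)
    alarms = np.zeros(len(signal), dtype=bool)
    for t in range(window, len(signal)):
        history = signal[t - window:t]
        median = np.median(history)
        mad = np.median(np.abs(history - median))
        # 1.4826 rescales MAD to match a standard deviation for Gaussian noise
        scale = 1.4826 * mad if mad > 0 else 1e-9
        alarms[t] = abs(signal[t] - median) / scale > threshold
    return alarms

def alarm_scores(alarms, labels):
    """Point-wise precision and recall of alarms against labeled anomalies."""
    alarms = np.asarray(alarms, dtype=bool)
    labels = np.asarray(labels, dtype=bool)
    tp = int(np.sum(alarms & labels))
    fp = int(np.sum(alarms & ~labels))
    fn = int(np.sum(~alarms & labels))
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

def time_to_detection(alarms, event_start):
    """Samples between event onset and the first alarm, or None if never detected."""
    hits = np.flatnonzero(np.asarray(alarms, dtype=bool)[event_start:])
    return int(hits[0]) if hits.size else None

# Synthetic pH trace with a labeled excursion between samples 60 and 65
t = np.arange(120)
ph = 7.0 + 0.05 * np.sin(t / 3.0)
labels = np.zeros(len(t), dtype=bool)
labels[60:66] = True
ph[labels] += 1.0
alarms = robust_zscore_alarms(ph)
scores = alarm_scores(alarms, labels)
delay = time_to_detection(alarms, event_start=60)
```

A trailing window keeps the detector causal, so it could run on a live sensor feed. The median and MAD tolerate a few anomalous samples in the history without masking the ongoing event.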

Utilities act hours earlier, watershed managers trace problems to upstream land use, and early algal bloom detection helps prevent fish kills and toxins in tap water.

Climate – From Coarse Models to Local Decisions

Climate data spans decades and comes from global reanalysis products, long-term station records, and gridded datasets. Resources such as Copernicus Climate Data Store and Daymet provide open access to climate variables including temperature, precipitation, radiation, and humidity at varying spatial and temporal resolutions. These physical datasets form the backbone for machine learning models that highlight patterns, improve resolution, and detect anomalies in climate behavior.

Core techniques

- Bias-corrected statistical downscaling with gradient boosting/transformers to turn 50-100 km climate grids into neighborhood-scale projections. Metrics: skill scores (CRPS, correlation) on withheld years.

- Extreme event detection via anomaly models on reanalysis and remote sensing. Metrics: precision/recall on labeled extreme (tail) events.

- Causal ML to separate signal from confounders when evaluating interventions.
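The bias-correction step that usually sits alongside statistical downscaling can be illustrated with empirical quantile mapping, a common baseline technique. This sketch is an assumption about how that step might look, not the method of any specific product; the learned models mentioned above would replace or extend it.

```python
import numpy as np

def fit_quantile_map(model_hist, obs_hist, n_quantiles=101):
    """Learn matching quantiles of modeled and observed values over a shared period."""
    probs = np.linspace(0.0, 1.0, n_quantiles)
    return np.quantile(model_hist, probs), np.quantile(obs_hist, probs)

def apply_quantile_map(values, model_q, obs_q):
    """Map each modeled value to the observed value at the same quantile.
    Values outside the historical model range are clamped to the end quantiles."""
    return np.interp(values, model_q, obs_q)

# Synthetic example: the "model" runs warm and exaggerates variability
obs = np.linspace(10.0, 30.0, 201)   # hypothetical station temperatures (deg C)
model = 1.5 * obs + 2.0              # hypothetical biased grid-cell output
model_q, obs_q = fit_quantile_map(model, obs)
corrected = apply_quantile_map(model, model_q, obs_q)
```

Because the synthetic bias is a monotonic transform, the mapping recovers the observations here. Real data will not be this clean, and the clamping behavior matters for projections that exceed the historical range.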

Cities can identify heat-resilient neighborhoods for tree cover, utilities can plan for compound drought-and-demand risks, and conservationists can highlight climate-safe zones worth protecting.

From Detection to Cure

Prioritizing restoration. Rank degraded sites by “restoration ROI”: probability of survival + expected carbon + biodiversity uplift - cost/constraints. This is a classic multi-objective optimization problem.
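One simple way to turn the "restoration ROI" expression into a ranking is a weighted sum over normalized criteria. The sketch below is a hedged illustration: the `Site` fields, the weights, and the min-max normalization are all assumptions, and a weighted sum is only one of several ways to handle multiple objectives.

```python
from dataclasses import dataclass

@dataclass
class Site:
    name: str
    survival_prob: float        # modeled probability that planting survives (0..1)
    carbon_t_per_ha: float      # expected carbon uptake
    biodiversity_uplift: float  # any consistent index
    cost_per_ha: float          # cost in any consistent currency

def _minmax(values):
    """Rescale to 0..1 so criteria with different units can be combined."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5] * len(values)
    return [(v - lo) / (hi - lo) for v in values]

def rank_sites(sites, weights=None):
    """Return (site, score) pairs sorted from best to worst."""
    # Hypothetical default weights; in practice stakeholders would set these.
    weights = weights or {"survival": 0.4, "carbon": 0.25,
                          "biodiversity": 0.2, "cost": 0.15}
    survival = _minmax([s.survival_prob for s in sites])
    carbon = _minmax([s.carbon_t_per_ha for s in sites])
    biodiversity = _minmax([s.biodiversity_uplift for s in sites])
    cost = _minmax([s.cost_per_ha for s in sites])
    scored = []
    for i, site in enumerate(sites):
        score = (weights["survival"] * survival[i]
                 + weights["carbon"] * carbon[i]
                 + weights["biodiversity"] * biodiversity[i]
                 - weights["cost"] * cost[i])  # cost counts against the site
        scored.append((site, score))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

sites = [
    Site("A", 0.9, 120.0, 0.8, 1000.0),
    Site("B", 0.5, 60.0, 0.4, 3000.0),
    Site("C", 0.7, 90.0, 0.6, 2000.0),
]
ranking = rank_sites(sites)
```

Changing the weights changes the ranking, which is the point: the trade-off between carbon, biodiversity, and cost is a policy choice that the model makes explicit rather than hides.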

Targeting enforcement. Fuse alerts (forest loss + road proximity + past incidents) and schedule ranger routes with reinforcement learning. The reward is the prevented loss, minus a penalty for travel cost.

Optimizing pollution controls. In plants and buildings, model predictive control with learned surrogates trims energy/emissions while meeting constraints (for example, comfort and effluent quality).

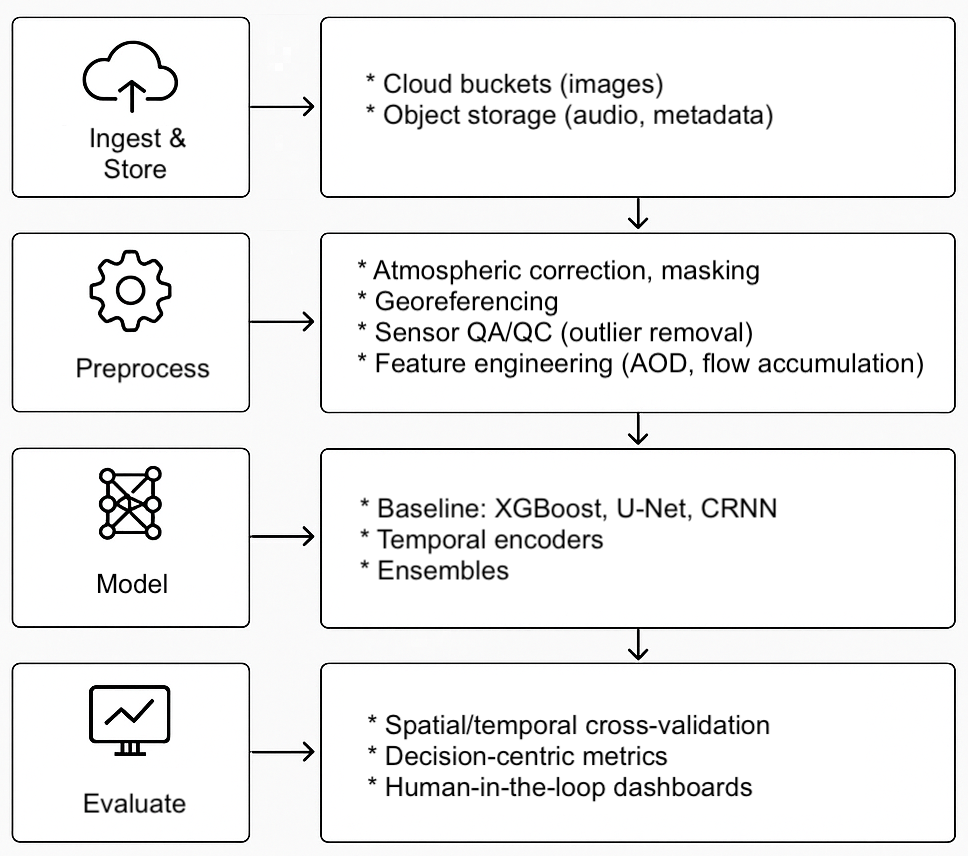

A Minimal, Repeatable Technical Stack

Environmental ML solutions involve a repeatable pipeline, from raw inputs to models and decisions that keeps projects organized and scalable. Below is a flow diagram that captures the typical journey from raw datasets to actionable outputs.

Conclusion

Environmentalism needs more than passion. It needs precision. Machine learning gives us precision at planetary scale: seeing small changes early, steering scarce resources wisely, and proving what works with data.

The goal is to build tools that help a ranger leave the station sooner, a plant operator tweak a setting earlier, a policymaker cut the right ton of emissions, and a community breathe cleaner air next month (not in the next decade). That is the bar. And it is absolutely within reach.